We consider generative models among the most exciting applications of machine learning. These models have reached a remarkable capacity to synthesize original multimedia content after learning a data distribution.

In this arena, the state of the art has been dominated by a family of models called generative adversarial networks, or GANs.

However, GANs suffer from training instabilities. The latest StyleGAN2-ada mitigates the instabilities that arise from overfit discriminators by adaptively augmenting the images shown to the discriminator during training.

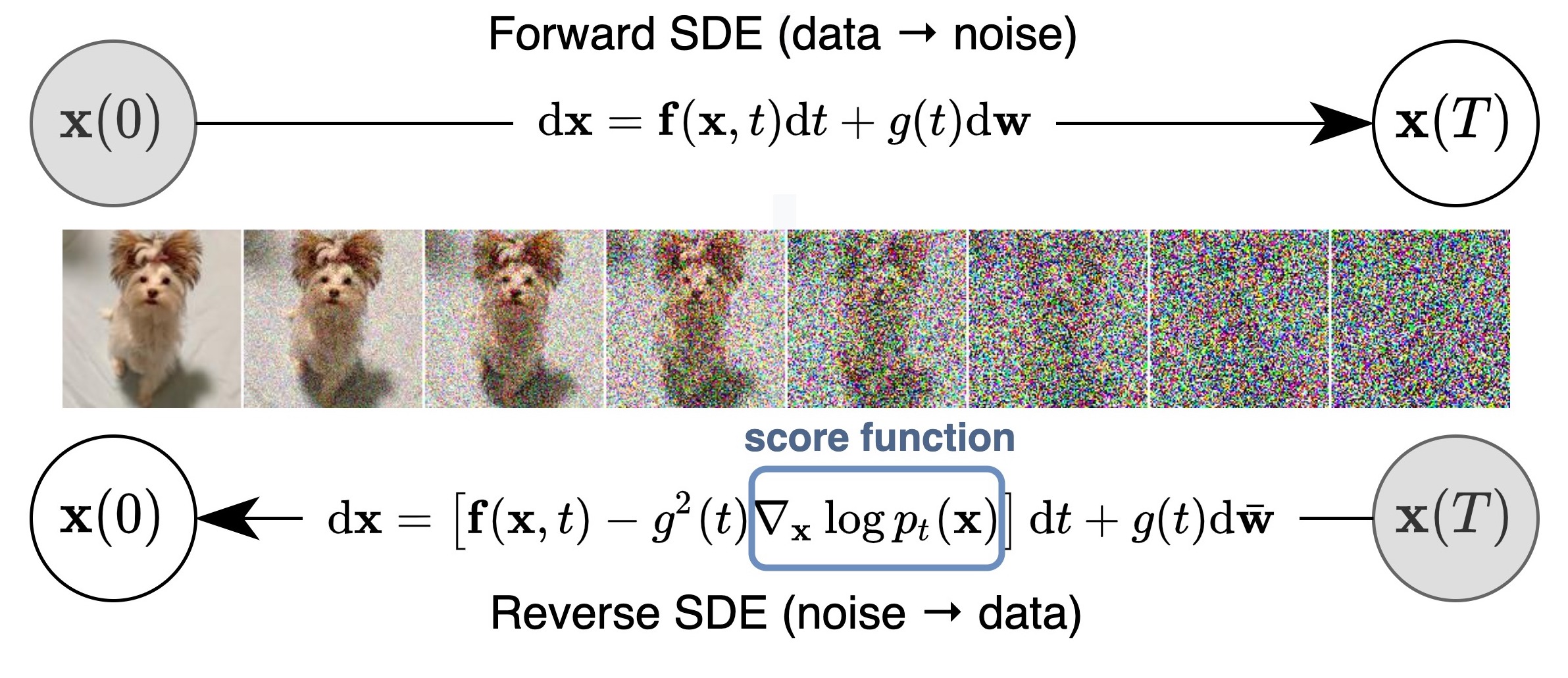

We’ve recently explored another exciting SOTA family of image synthesis techniques called score-based generative models. Under this approach, training data undergoes a diffusion process so that we can learn the score, the gradient of the log data density:

$$ \nabla_\mathbf{x} \log p(\mathbf{x}) $$

With an estimate of the score in hand, we can nudge any point in $\mathbb{R}^D$ toward one that is more likely under the training data. This estimate gives practitioners a tool to evolve randomly initialized points under the flow prescribed by the learned vector field.
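As a minimal sketch of this idea, the snippet below uses Langevin dynamics, one common way to follow a score field, on a hypothetical one-dimensional Gaussian "data distribution" whose score is known in closed form. The analytic `score` function stands in for a trained network, and `MU`, `SIGMA`, the step size, and the step count are illustrative assumptions rather than values from our experiments.

```python
import math
import random

MU, SIGMA = 3.0, 0.5  # hypothetical 1-D "data distribution" N(MU, SIGMA^2)

def score(x):
    """Analytic score d/dx log p(x) of N(MU, SIGMA^2); stands in for a trained network."""
    return -(x - MU) / SIGMA ** 2

def log_density(x):
    """Log-density of N(MU, SIGMA^2), used only to check that points become more likely."""
    return -0.5 * ((x - MU) / SIGMA) ** 2 - math.log(SIGMA * math.sqrt(2 * math.pi))

def langevin_sample(x0, step=1e-2, n_steps=300, rng=random):
    """Unadjusted Langevin dynamics: drift along the score plus Gaussian noise."""
    x = x0
    for _ in range(n_steps):
        x = x + step * score(x) + math.sqrt(2 * step) * rng.gauss(0.0, 1.0)
    return x

rng = random.Random(0)

# One noiseless step along the score moves a far-away point to a more likely one.
x_far = -2.0
x_nudged = x_far + 1e-2 * score(x_far)

# Many noisy steps from random starting points give approximate samples.
samples = [langevin_sample(rng.uniform(-5.0, 5.0), rng=rng) for _ in range(500)]
sample_mean = sum(samples) / len(samples)
```

Here `x_nudged` has a higher log-density than `x_far`, and `sample_mean` lands close to `MU` even though the starting points are spread uniformly over an interval.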

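These diffusion processes can be expressed generally using the stochastic differential equation below, where $\mathbf{f}(\mathbf{x}, t)$ is the drift coefficient, $g(t)$ is the diffusion coefficient, and $\mathbf{w}$ is a standard Wiener process: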

$$ d \mathbf{x} = \mathbf{f}(\mathbf{x}, t) \, d t + g(t) \, d \mathbf{w} $$
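To make the two coefficients concrete, here is a minimal Euler-Maruyama simulation of this equation for one illustrative choice: a variance-preserving drift $\mathbf{f}(\mathbf{x}, t) = -\tfrac{1}{2}\beta(t)\mathbf{x}$ with $g(t) = \sqrt{\beta(t)}$. The linear schedule `beta`, its constants, and the toy bimodal data are assumptions made for the example.

```python
import math
import random

BETA_MIN, BETA_MAX = 0.1, 20.0  # illustrative noise schedule (an assumption)

def beta(t):
    """Linear noise schedule on t in [0, 1]."""
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)

def drift(x, t):
    """f(x, t) for a variance-preserving SDE."""
    return -0.5 * beta(t) * x

def diffusion(t):
    """g(t) for a variance-preserving SDE."""
    return math.sqrt(beta(t))

def euler_maruyama(x0, n_steps=200, t_end=1.0, rng=random):
    """Simulate dx = f(x, t) dt + g(t) dw from t = 0 to t = t_end."""
    dt = t_end / n_steps
    x = x0
    for i in range(n_steps):
        t = i * dt
        dw = math.sqrt(dt) * rng.gauss(0.0, 1.0)  # Brownian increment
        x = x + drift(x, t) * dt + diffusion(t) * dw
    return x

rng = random.Random(0)
data = [rng.choice([-2.0, 2.0]) for _ in range(2000)]  # toy bimodal "data"
noised = [euler_maruyama(x, rng=rng) for x in data]

mean = sum(noised) / len(noised)
var = sum((x - mean) ** 2 for x in noised) / len(noised)
```

After integrating to $t = 1$, the two data modes are no longer distinguishable: the sample mean is close to 0 and the variance is close to 1, which is what makes a standard Gaussian a reasonable starting point for generation.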

Framed this way, sample generation relates to the reverse-time dynamics of such diffusion processes. Fortunately, a result from stochastic calculus due to Anderson in the 1980s shows that the reverse of a diffusion process is itself a diffusion process, one whose drift depends on the score.
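For reference, in the notation of the forward equation above, the reverse-time process can be written as follows. Here $p_t$ denotes the marginal density of $\mathbf{x}$ at time $t$ and $\bar{\mathbf{w}}$ a Wiener process running backward in time; both symbols are introduced here and do not appear elsewhere in this post.

$$ d \mathbf{x} = \left[ \mathbf{f}(\mathbf{x}, t) - g(t)^2 \nabla_\mathbf{x} \log p_t(\mathbf{x}) \right] d t + g(t) \, d \bar{\mathbf{w}} $$

The only unknown on the right-hand side is the score of the noised data, which is exactly the quantity the model is trained to estimate.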

We draw cheap Gaussian samples as initial conditions and evolve them according to the learned “probability flow” to generate realistic instances.

Despite apparent similarities to normalizing flows, score-based models sidestep the normalization challenge: the score does not depend on the normalizing constant, so no high-dimensional integral needs to be computed.

In fact, highly optimized off-the-shelf ODE solvers can use the learned score vector field to generate samples by solving an initial value problem. The researchers also explore several other sampling methods to improve sample quality, offering a nice template for extensions.
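The sketch below solves such an initial value problem on a one-dimensional toy problem. For a diffusion with drift $\mathbf{f}$ and diffusion coefficient $g$, the probability flow ODE is $d\mathbf{x}/dt = \mathbf{f}(\mathbf{x}, t) - \tfrac{1}{2} g(t)^2 \nabla_\mathbf{x} \log p_t(\mathbf{x})$, integrated from $t = 1$ back to $t = 0$. A hand-written fixed-step RK4 integrator stands in for an adaptive SciPy solver so that the example needs only the standard library, an analytic score stands in for the trained network, and all constants are illustrative assumptions.

```python
import math
import random

MU, SIGMA = 3.0, 0.5            # toy 1-D data distribution N(MU, SIGMA^2)
BETA_MIN, BETA_MAX = 0.1, 20.0  # illustrative variance-preserving schedule

def beta(t):
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)

def alpha(t):
    """Signal scale exp(-0.5 * integral of beta from 0 to t)."""
    return math.exp(-0.5 * (BETA_MIN * t + 0.5 * (BETA_MAX - BETA_MIN) * t * t))

def score(x, t):
    """Analytic score of the noised marginal p_t; a trained network would go here."""
    a = alpha(t)
    mean = a * MU
    var = (a * SIGMA) ** 2 + 1.0 - a * a
    return -(x - mean) / var

def ode_rhs(x, t):
    """Probability flow ODE: dx/dt = f(x, t) - 0.5 * g(t)^2 * score(x, t)."""
    return -0.5 * beta(t) * x - 0.5 * beta(t) * score(x, t)

def solve_reverse(x_T, n_steps=100, t_start=1.0, t_end=0.0):
    """Fixed-step RK4 from t_start down to t_end (the step size h is negative)."""
    h = (t_end - t_start) / n_steps
    x, t = x_T, t_start
    for _ in range(n_steps):
        k1 = ode_rhs(x, t)
        k2 = ode_rhs(x + 0.5 * h * k1, t + 0.5 * h)
        k3 = ode_rhs(x + 0.5 * h * k2, t + 0.5 * h)
        k4 = ode_rhs(x + h * k3, t + h)
        x += (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        t += h
    return x

rng = random.Random(0)
# Initial conditions are plain standard-normal draws.
samples = [solve_reverse(rng.gauss(0.0, 1.0)) for _ in range(500)]
mean = sum(samples) / len(samples)
std = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
```

The generated samples have a mean near `MU` and a standard deviation near `SIGMA`, so the deterministic flow has carried Gaussian noise onto the toy data distribution. Unlike the noise-driven samplers, each initial condition maps to exactly one sample.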

Aside from generating high-quality samples, score-based models also support exact likelihood computations, class-conditioned sampling and inpainting/colorization applications.

These computations rely on a learned approximation of the probability flow ODE and borrow ideas from neural ODEs. Many of these models debuted in Denoising Diffusion Probabilistic Models, where they were used to generate realistic images.
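As a hedged illustration of the exact likelihood computation, write $\tilde{\mathbf{f}}$ for the drift of the probability flow ODE (a symbol introduced here). The instantaneous change of variables formula from the neural ODE literature then gives

$$ \log p_0(\mathbf{x}(0)) = \log p_T(\mathbf{x}(T)) + \int_0^T \nabla \cdot \tilde{\mathbf{f}}(\mathbf{x}(t), t) \, d t $$

The sketch below checks this on a one-dimensional toy Gaussian where the answer is known. The analytic score, the constants, the midpoint integrator, and the finite-difference divergence are all assumptions made for the example; in high dimensions the divergence is typically estimated stochastically instead.

```python
import math

MU, SIGMA = 3.0, 0.5            # toy 1-D data distribution N(MU, SIGMA^2)
BETA_MIN, BETA_MAX = 0.1, 20.0  # illustrative variance-preserving schedule

def beta(t):
    return BETA_MIN + t * (BETA_MAX - BETA_MIN)

def alpha(t):
    """Signal scale exp(-0.5 * integral of beta from 0 to t)."""
    return math.exp(-0.5 * (BETA_MIN * t + 0.5 * (BETA_MAX - BETA_MIN) * t * t))

def score(x, t):
    """Analytic score of the noised marginal p_t; stands in for a trained network."""
    a = alpha(t)
    return -(x - a * MU) / ((a * SIGMA) ** 2 + 1.0 - a * a)

def ode_rhs(x, t):
    """Drift of the probability flow ODE for the variance-preserving SDE."""
    return -0.5 * beta(t) * x - 0.5 * beta(t) * score(x, t)

def divergence(x, t, eps=1e-4):
    """d/dx of the ODE drift by central differences (the 1-D divergence)."""
    return (ode_rhs(x + eps, t) - ode_rhs(x - eps, t)) / (2.0 * eps)

def log_likelihood(x0, n_steps=500, t_end=1.0):
    """Integrate the ODE forward from t = 0 while accumulating the divergence."""
    h = t_end / n_steps
    x, t, div_integral = x0, 0.0, 0.0
    for _ in range(n_steps):
        x_mid = x + 0.5 * h * ode_rhs(x, t)               # midpoint state
        div_integral += h * divergence(x_mid, t + 0.5 * h)
        x += h * ode_rhs(x_mid, t + 0.5 * h)
        t += h
    log_prior = -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)  # N(0, 1) at t_end
    return log_prior + div_integral

def exact_log_density(x0):
    """Closed-form log-density of the toy data distribution, for comparison."""
    return -0.5 * ((x0 - MU) / SIGMA) ** 2 - math.log(SIGMA * math.sqrt(2.0 * math.pi))

x0 = 2.6
estimate = log_likelihood(x0)
reference = exact_log_density(x0)
```

The two values agree up to a small gap that comes from treating the terminal distribution as exactly standard normal.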

Generating Custom Movie Posters with Score-based Models

The repo trains score models using JAX and uses SciPy ODE solvers to generate samples. The authors offer detailed Colab notebooks with pretrained models, along with the configurations referenced in their ICLR 2021 conference paper.

This makes it easy to generate realistic samples of CIFAR10 categories:

For rough comparison to previous experiments, we start by applying this generative model to a corpus of 40K unlabeled theatrical posters augmented by horizontal reflection. For training, we package the posters into tfrecords using the dataset_tool.py found in the StyleGAN2-ada repo.

Next, we tried applying the high-resolution configuration configs/ve/celebahq_256_ncsnpp_continuous.py, used to generate results on CelebA-HQ, to our theatrical poster corpus. This entailed reducing the batch size to fit the model and training samples into GPU memory. Unfortunately, without a corresponding reduction in the learning rate, this seemed to destabilize training:
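One common heuristic for this situation, stated here as an assumption rather than something the repo prescribes, is the linear scaling rule: shrink the learning rate by the same factor as the batch size. The helper name and the numbers below are hypothetical and are not taken from the config file.

```python
def scale_learning_rate(base_lr, base_batch_size, new_batch_size):
    """Linear scaling heuristic: keep the ratio lr / batch_size constant."""
    if base_batch_size <= 0 or new_batch_size <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * new_batch_size / base_batch_size

# Hypothetical reference setting and a batch size that fits in GPU memory.
reference_lr, reference_batch = 2e-4, 64
lr_for_small_batch = scale_learning_rate(reference_lr, reference_batch, 8)
```

With these example numbers the batch size drops by a factor of 8, so the learning rate drops from 2e-4 to 2.5e-5. This is only a starting point; the stable value still has to be found empirically.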

Then, using the smaller model from configs/vp/ddpm/celebahq.py and a reduced image resolution, we obtained samples like these over the course of training:

Training 100 steps takes approximately 1.5 minutes when saturating 2 Titan RTX GPUs, but less than 15 seconds on TPUs, even with batches that are 8X larger!
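As a rough back-of-the-envelope comparison, take 1.5 minutes as 90 seconds, assume the TPU run uses the full 15 seconds, and write $B$ for the GPU batch size (a symbol introduced here for illustration). The ratio of images processed per second is then:

$$ \frac{100 \cdot 8B / 15\,\text{s}}{100 \cdot B / 90\,\text{s}} = 8 \times \frac{90}{15} = 48 $$

Since 15 seconds is an upper bound on the TPU time, the TPU setup processes at least 48 times as many images per second under these assumptions.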

With a promising start, we scale up training with an order of magnitude more images and include genre labels for class-conditional training and generation. We find a qualitative improvement in the synthesized images.

Compared to experiments with StyleGAN2, we find greater variety in qualities like hair, gender, and facial expressions.

Conclusion

Fantastically, by corrupting training samples through a diffusion process, we can learn the score needed to approximate the reverse-time dynamics, then use ODE solvers to generate realistic samples from noise!

We’ve even minted a selection of generated posters on OpenSea!

Cascaded Diffusion Models extend this line of work with a sequence of score-based models that progressively sharpen and resolve details of images generated by earlier stages of the cascade.

We’ve also tried applying super-resolution techniques like ESRGAN to upscale our posters, as well as first-order motion models to animate them, in our FilmGeeks: Cinemagraphs collection.

Stay tuned for updates applying StyleGAN3 for image generation and animation!